Amazon S3

You can load data files hosted on Amazon S3 using the Amazon S3 Connector.

For more information, refer to the data connector user guide.

Parquet and ORC files can be loaded into Studio through the S3 connector. Currently, these files must be processed as Hex Tiles; learn how in Loading Parquet and ORC Files below.

Configuring Amazon S3 Connector

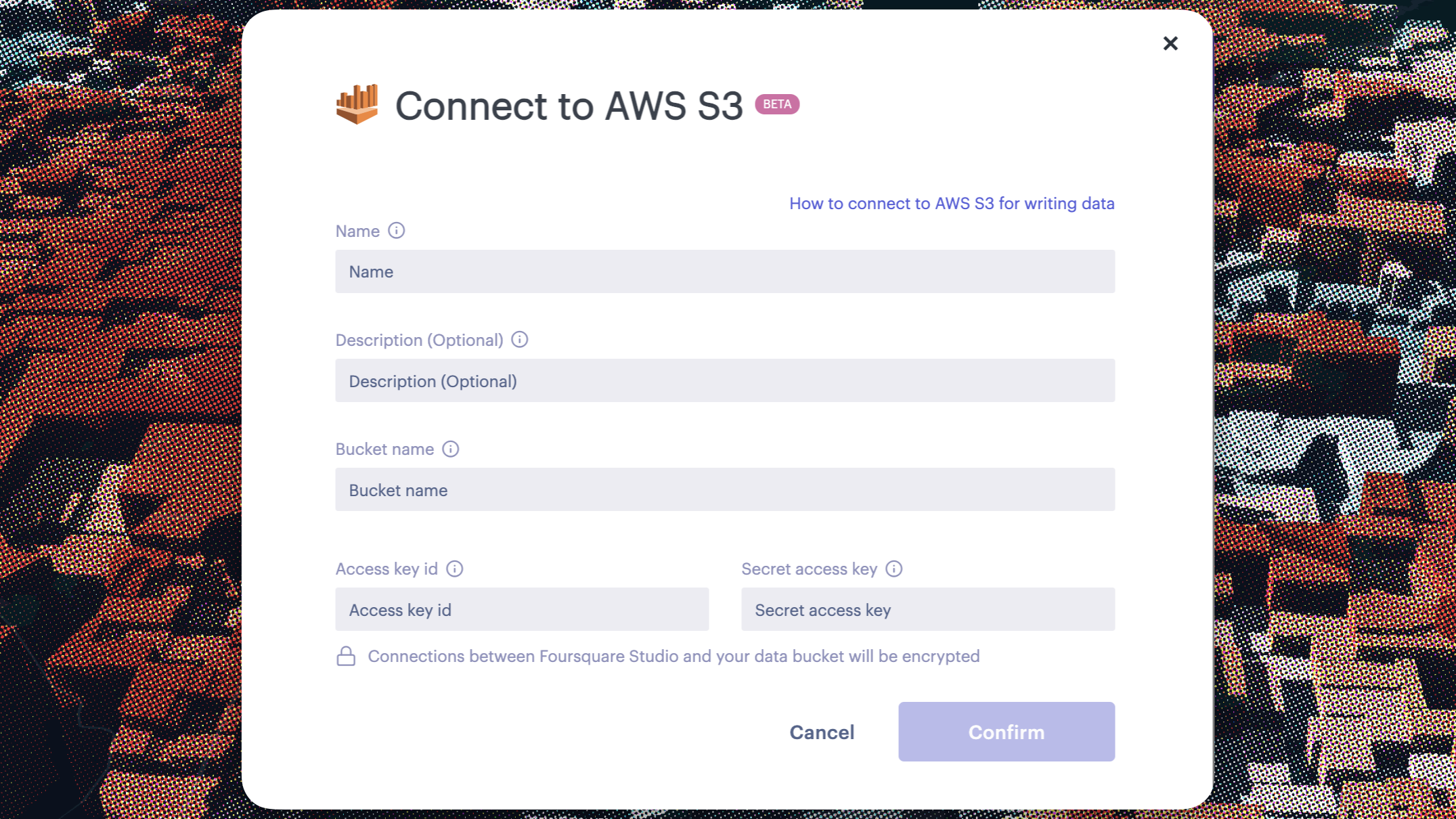

When you add an Amazon S3 data connector, you are presented with a form for configuring the connection.

The AWS S3 connector form.

The form contains the following fields:

| Field | Description |
|---|---|
| Name | A name for the connector. This is how the connector will appear to you and your organization. |
| Description | Optional. A description for the connector. |
| Bucket Name | The S3 bucket name associated with this data connector. |
| Access Key ID | Your AWS access key ID. See below for retrieval instructions. |
| Secret Access Key | Your AWS secret access key. See below for retrieval instructions. |

After all required fields are populated correctly, the Confirm button will be enabled.

Click Confirm to create a new Amazon S3 connector.

Finding Access Key ID and Secret Access Key

To find your Access Key ID and Secret Access Key:

- Go to the AWS website and log in to the AWS Management Console.

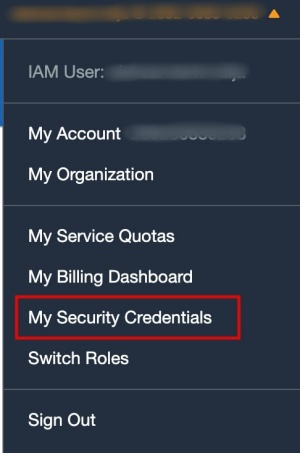

- In the top-right corner, click your profile name and select My Security Credentials from the drop-down menu.

Security Credentials Menu

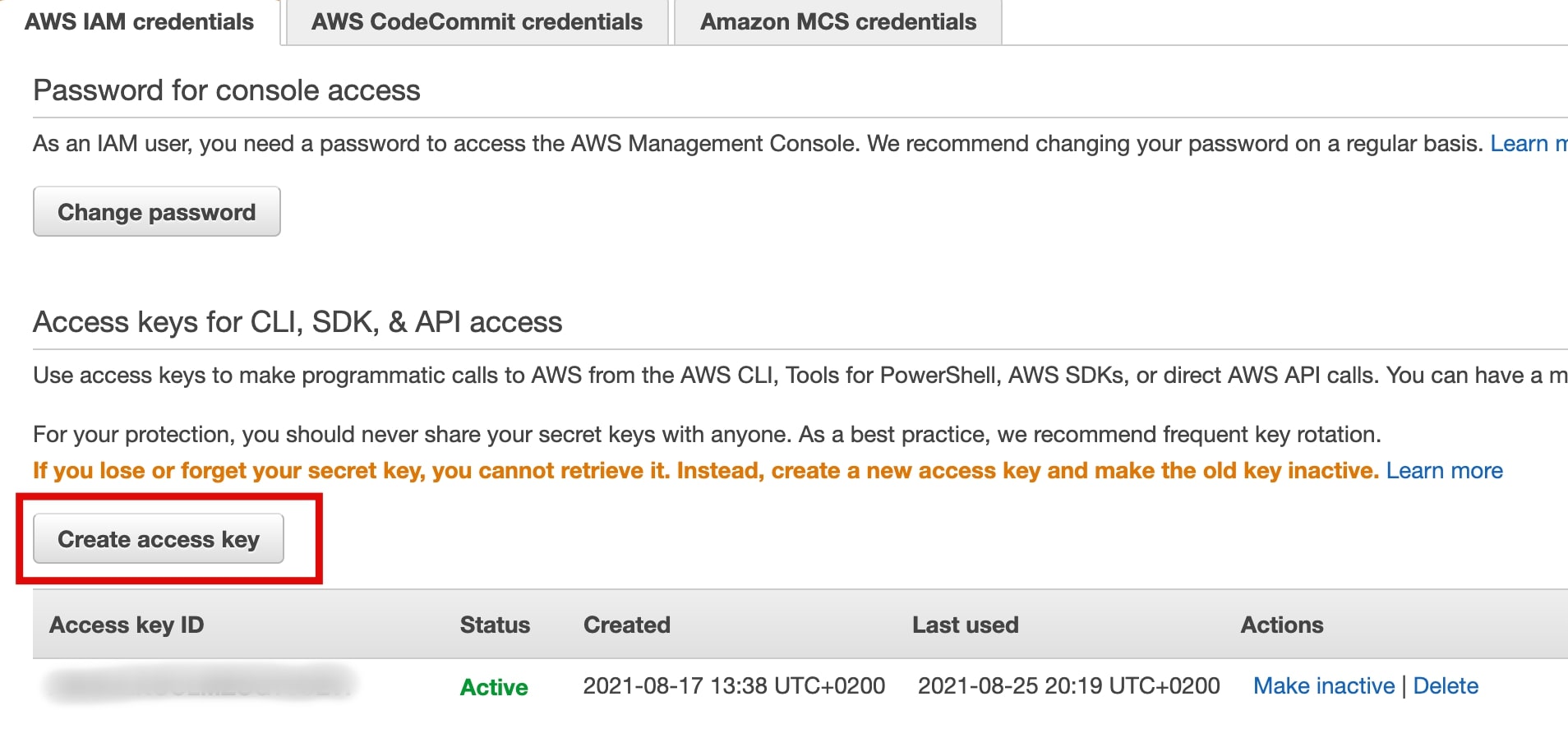

- The AWS IAM security credentials page opens. If you don't already have an access key, click Create access key.

Create Access Key

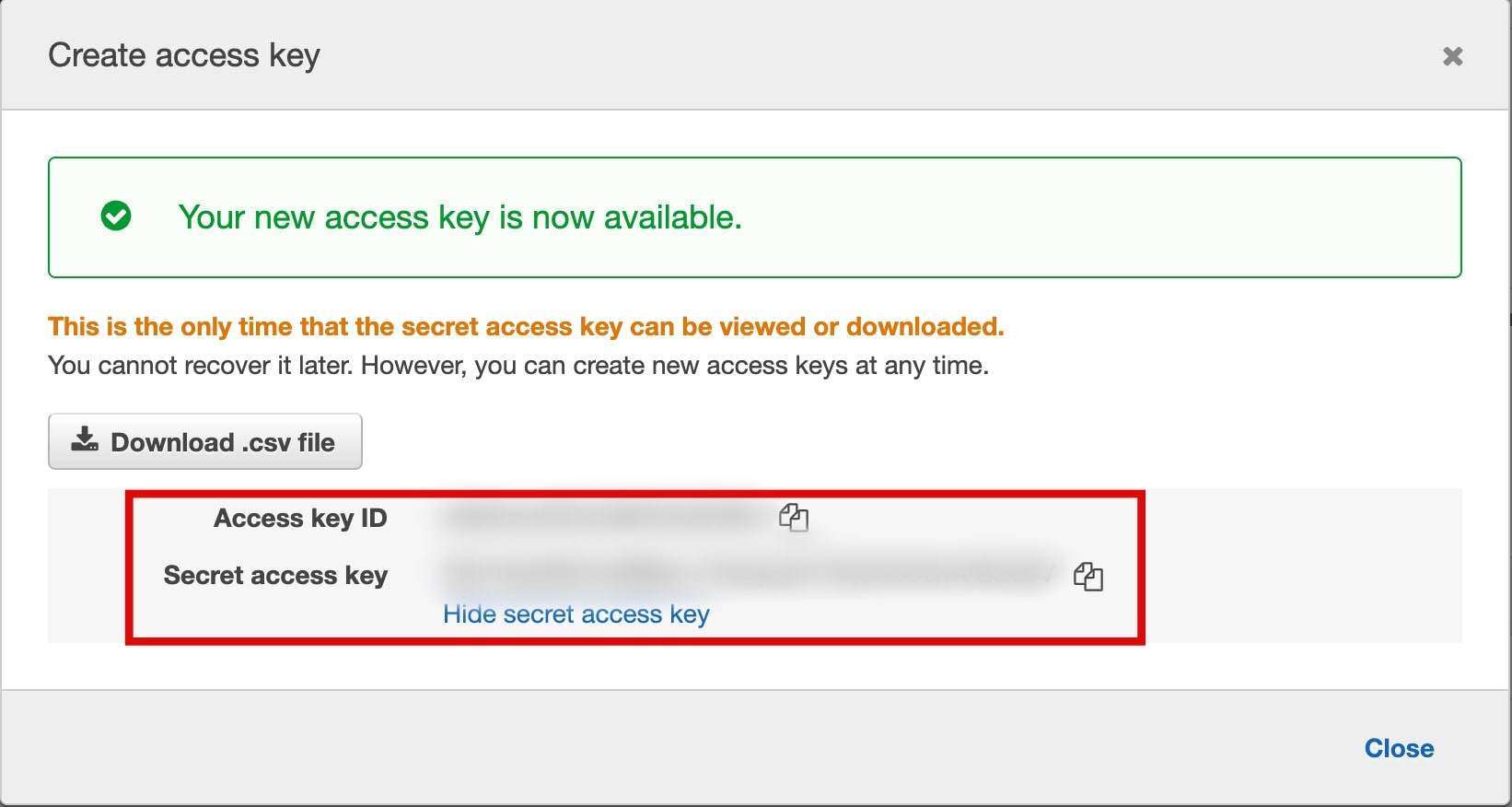

- AWS then generates an access key ID and a secret access key. Copy both values and paste them into the corresponding fields of the Amazon S3 connector form. The secret access key is only shown when the key is created, so copy it before leaving the page.

Created Access & Secret Key
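Pasting credentials is an easy place for small mistakes, such as stray whitespace or the two values swapped. The hypothetical helper below is a rough pre-check you could run before filling in the form. It relies on an assumption about the usual shape of AWS keys (a 20-character access key ID of uppercase letters and digits, and a 40-character secret access key); it is a heuristic, not an official validation rule, and a key that fails it is not necessarily invalid. The sample values are the example credentials published in the AWS documentation, not real keys.

```python
from __future__ import annotations

import re

# Heuristic patterns, ASSUMED from the usual AWS key format (not an official validator):
# access key IDs are typically 20 uppercase letters/digits,
# secret access keys are typically 40 base64-style characters.
ACCESS_KEY_ID_RE = re.compile(r"[A-Z0-9]{20}")
SECRET_ACCESS_KEY_RE = re.compile(r"[A-Za-z0-9/+=]{40}")


def find_key_problems(access_key_id: str, secret_access_key: str) -> list[str]:
    """Return likely copy/paste mistakes; an empty list means nothing obvious was found."""
    problems = []
    if access_key_id != access_key_id.strip() or secret_access_key != secret_access_key.strip():
        problems.append("remove leading/trailing whitespace")
    key_id, secret = access_key_id.strip(), secret_access_key.strip()
    # If each value matches the other field's shape, they were probably pasted into the wrong fields.
    if ACCESS_KEY_ID_RE.fullmatch(secret) and SECRET_ACCESS_KEY_RE.fullmatch(key_id):
        problems.append("the two values look swapped")
    else:
        if not ACCESS_KEY_ID_RE.fullmatch(key_id):
            problems.append("access key ID does not look like a 20-character key ID")
        if not SECRET_ACCESS_KEY_RE.fullmatch(secret):
            problems.append("secret access key does not look like a 40-character secret")
    return problems


if __name__ == "__main__":
    # Example values from the AWS documentation, not real credentials.
    print(find_key_problems("AKIAIOSFODNN7EXAMPLE", "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"))
```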

Loading Parquet and ORC Files

Once you have created the data connector, you can create datasets that reference this connector for their access to S3. At present this must be done through our Data SDK or REST API, though we expect to allow creation of these datasets through the UI in the future.
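If you would rather not install the Data SDK, the REST requests used in steps 1 and 2 below can also be built with Python's standard library. The sketch below mirrors the cURL examples on this page and only builds the requests; pass one to urllib.request.urlopen to actually send it. It assumes that every endpoint, including the connector listings, accepts the same Authorization: Bearer <TOKEN> header shown in the other REST examples, and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Optional

# Base URL taken from the cURL examples on this page.
API_BASE = "https://data-api.foursquare.com"


def build_request(method: str, path: str, token: str, body: Optional[Any] = None) -> urllib.request.Request:
    """Build (but do not send) a request with Bearer auth and an optional JSON body."""
    headers = {"Authorization": f"Bearer {token}"}  # ASSUMED to apply to all endpoints
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(API_BASE + path, data=data, headers=headers, method=method)


def list_connectors_request(token: str, organization: bool = False) -> urllib.request.Request:
    """Step 1: list connectors for the user account or, with organization=True, the organization."""
    path = "/v1/data-connections/for-organization" if organization else "/v1/data-connections"
    return build_request("GET", path, token)


def create_external_dataset_request(token: str, name: str, source: str, connector_id: str) -> urllib.request.Request:
    """Step 2: register an externally hosted dataset that reads from S3 through the connector."""
    body = {
        "name": name,
        "type": "externally-hosted",
        "metadata": {"source": source},
        "dataConnectionId": connector_id,
    }
    return build_request("POST", "/catalog/v1/datasets", token, body)


if __name__ == "__main__":
    req = create_external_dataset_request(
        "<TOKEN>", "My S3 Dataset", "s3://my-bucket/path/to/data.parquet", "<SOME_ID>"
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To send: with urllib.request.urlopen(req) as resp: print(json.load(resp))
```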

1. Retrieve your data connector's ID

The easiest way to find the ID is through the Data SDK or API:

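```python
# Assumes data_sdk is an already-initialized Data SDK client.
# List data connectors associated with user account
data_connectors = data_sdk.list_data_connectors()
```

```shell
fsq-data-sdk list-data-connectors
```

```shell
curl -X GET https://data-api.foursquare.com/v1/data-connections \
  -H 'Authorization: Bearer <TOKEN>'
```

If the connector belongs to your organization rather than your user account, you must pass the organization flag: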

```python
# List data connectors associated with organization
data_connectors = data_sdk.list_data_connectors(organization=True)
```

```shell
fsq-data-sdk list-data-connectors --organization
```

```shell
curl -X GET https://data-api.foursquare.com/v1/data-connections/for-organization \
  -H 'Authorization: Bearer <TOKEN>'
```

2. Create a new external dataset associated with the data connector

External datasets created this way cannot yet be loaded directly in Studio, even when the files are small, though we expect to support loading such datasets in the future.

Note: The S3 URL can include glob patterns. For a multi-part dataset, use a URL such as s3://my-bucket/path/to/data-*.parquet; all matching files must share the same column schema.
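To check which objects a glob source such as s3://my-bucket/path/to/data-*.parquet would cover, the sketch below splits an s3:// URL into its bucket and key pattern and filters a list of object keys with Python's fnmatch module. This is only an approximation: fnmatch's * also matches /, and the exact glob rules the connector applies are an assumption here. The helper names and sample keys are hypothetical. Because each connector is configured with a single Bucket Name, it is presumably worth confirming that the bucket in the URL matches it.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def split_s3_url(url: str) -> tuple[str, str]:
    """Split 's3://bucket/some/key' into ('bucket', 'some/key')."""
    prefix = "s3://"
    if not url.startswith(prefix):
        raise ValueError(f"not an s3:// URL: {url!r}")
    bucket, _, key = url[len(prefix):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name: {url!r}")
    return bucket, key


def matching_keys(source_url: str, keys: list[str]) -> list[str]:
    """Return the keys a glob source URL would cover (case-sensitive, shell-style matching).

    Approximation: fnmatch's '*' also matches '/', unlike a typical shell glob.
    """
    _bucket, pattern = split_s3_url(source_url)
    return [key for key in keys if fnmatchcase(key, pattern)]


if __name__ == "__main__":
    # Hypothetical object keys in my-bucket.
    keys = [
        "path/to/data-0001.parquet",
        "path/to/data-0002.parquet",
        "path/to/readme.txt",
        "path/to/old/data-0001.parquet",
    ]
    source = "s3://my-bucket/path/to/data-*.parquet"
    bucket, _ = split_s3_url(source)
    print(bucket)  # my-bucket
    print(matching_keys(source, keys))  # ['path/to/data-0001.parquet', 'path/to/data-0002.parquet']
```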

Create an external dataset, then copy its UUID from the function's return value.

```python
# The returned value includes the new dataset's UUID.
dataset = data_sdk.create_external_dataset(
    name="test-external-dataset",
    description="my s3 dataset",
    source="s3://my-bucket/path/to/data.parquet",
    connector="<data-connector-uuid>",
)
```

```shell
fsq-data-sdk create-external-dataset --name 'My S3 Dataset' --source 's3://my-bucket/path/to/data.parquet' --connector-id <SOME_ID>
```

```shell
curl -X POST 'https://data-api.foursquare.com/catalog/v1/datasets' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer <TOKEN>' \
  --data '{
    "name": "My S3 Dataset",
    "type": "externally-hosted",
    "metadata": {
      "source": "s3://my-bucket/path/to/data.parquet"
    },
    "dataConnectionId": "<SOME_ID>"
  }'
```

3. Create Hex Tiles

With the UUID from the external dataset, create Hex Tiles using the Hex Tile creation UI, the Data SDK, or the REST API.

Note: Because Parquet (and possibly ORC) files are strongly typed, Hex Tile generation may throw errors if you do not specify the output types of your columns.

```python
data_sdk.generate_hextile(
    source="<UUID of the external dataset>",
    source_time_column="time",
    source_lat_column="lat",
    source_lng_column="lon",
    finest_resolution=9,
    time_intervals=["HOUR"],
    output_columns=[
        {
            "source_column": "precip_kg/m2",
            "target_column": "precip_sum",
            "agg_method": "sum",
        }
    ],
)
```

```shell
curl -X POST https://data-api.foursquare.com/internal/v1/datasets/hextile \
  -H 'Authorization: Bearer <token>' \
  -H 'Content-Type: application/json' \
  --data-raw '{
    "source": "<UUID from external dataset>",
    "sourceHexColumn": "hex",
    "sourceTimeColumn": "datestr",
    "timeIntervals": ["DAY"],
    "targetResOffset": 4,
    "outputColumns": [
      {
        "sourceColumn": "metric",
        "targetColumn": "metric_sum",
        "aggMethod": "sum"
      }
    ]
  }'
```

Your Parquet/ORC data will then be stored in your Studio account as Hex Tiles.
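The Data SDK example uses snake_case keyword names, while the REST body uses camelCase field names (source_time_column and sourceTimeColumn, output_columns and outputColumns, agg_method and aggMethod). If you keep one parameter set and switch between the two interfaces, a small converter such as the hypothetical to_rest_body below can produce the REST body. It assumes every REST field is simply the camelCase form of the corresponding snake_case name, which holds for the fields shown on this page but should be checked against the API reference for any others; source_hex_column and target_res_offset are hypothetical names derived from the REST example, not confirmed SDK parameters.

```python
import json
from typing import Any


def snake_to_camel(name: str) -> str:
    """Convert e.g. 'source_time_column' to 'sourceTimeColumn'."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def to_rest_body(value: Any) -> Any:
    """Recursively rename dict keys from snake_case to camelCase.

    Only keys are renamed; values such as column names ('precip_kg/m2') are left untouched.
    ASSUMPTION: REST field names are the camelCase forms of the snake_case names.
    """
    if isinstance(value, dict):
        return {snake_to_camel(k): to_rest_body(v) for k, v in value.items()}
    if isinstance(value, list):
        return [to_rest_body(v) for v in value]
    return value


# Hypothetical snake_case parameter set mirroring the REST example above.
sdk_params = {
    "source": "<UUID from external dataset>",
    "source_hex_column": "hex",
    "source_time_column": "datestr",
    "time_intervals": ["DAY"],
    "target_res_offset": 4,
    "output_columns": [
        {"source_column": "metric", "target_column": "metric_sum", "agg_method": "sum"}
    ],
}

if __name__ == "__main__":
    print(json.dumps(to_rest_body(sdk_params), indent=2))
```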


Enterprise feature. Contact us to learn more.
